Product

Trust & Legal

How we solved the challenge of serving optimized images at scale while maintaining backward compatibility and performance.

Image optimization often has very little to do with the images themselves—and everything to do with how you architect the system around them.

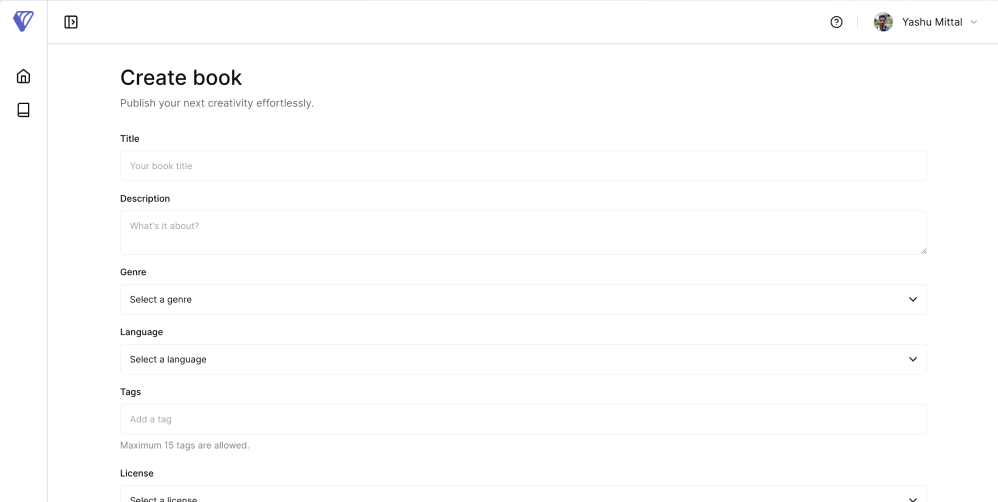

On Verbals, every book can have one active cover image. Authors upload covers through the creator dashboard, and they often choose high-quality, uncompressed images.

Initially, we were serving the original uploaded image everywhere—on the home feed, book detail page, author profile, library, and beyond.

This approach was straightforward and worked fine functionally, but it didn't scale. Serving high-res images across the board meant heavy payloads, slow page loads, and unnecessary bandwidth usage, which was especially painful on mobile devices or slow internet connections. A thumbnail that should weigh around 150KB shouldn't require fetching a 3MB original just to render in a list.

We needed to fix this without breaking all the existing data and without introducing complexity for authors or readers. That’s what led us to build a more intelligent, scalable, and backward-compatible image handling system.

Modern apps need to serve the same image in multiple formats and sizes: low-quality image placeholders (LQIP) for instant loading, small thumbnails for lists, medium-sized images for cards, and full-resolution versions for detail views.

A basic solution is to generate fixed variants at specific sizes (10px, 150px, 350px). But six months later, you need a 600px variant for a new feature. Suddenly, all your existing images are "broken": you either backfill every single image or clutter your codebase with fallback logic.

We wanted something better. Flexible. Forward-compatible. And smart.

We broke the solution into three main components:

Once the author uploads a cover image, a message is enqueued on a topic. Our image processing service consumes it in the background and compresses the image into different variants.
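To make the hand-off concrete, here is a minimal sketch of how the upload path and the background worker might be decoupled. The `CoverUploadedMessage` shape, the `InMemoryTopic` class, and the example URL are assumptions for illustration only; a real deployment would use a managed queue or pub/sub service, and handlers would normally be asynchronous.

```typescript
// Hypothetical message published when an author uploads a cover.
interface CoverUploadedMessage {
  coverId: string;
  originalUrl: string;
}

type Handler<T> = (message: T) => void;

// Stand-in for a real topic or queue; it only illustrates the decoupling.
class InMemoryTopic<T> {
  private handlers: Handler<T>[] = [];
  private pending: T[] = [];

  subscribe(handler: Handler<T>): void {
    this.handlers.push(handler);
  }

  // Upload path: records the message and returns without doing image work.
  publish(message: T): void {
    this.pending.push(message);
  }

  // Worker path: delivers every pending message to every handler.
  drain(): number {
    const batch = this.pending;
    this.pending = [];
    for (const message of batch) {
      for (const handler of this.handlers) {
        handler(message);
      }
    }
    return batch.length;
  }
}

const topic = new InMemoryTopic<CoverUploadedMessage>();
const processedCoverIds: string[] = [];

// The image processing service would generate variants here.
topic.subscribe((message) => {
  processedCoverIds.push(message.coverId);
});

topic.publish({ coverId: 'cover-1', originalUrl: 'https://example.com/originals/cover-1.png' });
const processedCount = topic.drain();
```

The point of the sketch is that `publish` returns right away, so the author's upload request never waits on resizing.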

    interface ImageVariant {
      width: number;
      url: string;
      fileSize: number;
      createdAt: Date;
    }

    interface ProcessingConfig {
      targetWidths: number[];
      quality: number;
      format: 'jpeg' | 'webp' | 'avif';
    }

    class ImageProcessor {
      // resizeImage() and uploadToStorage() are helper methods not shown here.
      async processImageVariants(
        originalBuffer: Buffer,
        config: ProcessingConfig
      ): Promise<ImageVariant[]> {
        // Generate every configured width in parallel.
        return Promise.all(
          config.targetWidths.map(async (width) => {
            const resizedBuffer = await this.resizeImage(originalBuffer, width, config);
            const uploadResult = await this.uploadToStorage(resizedBuffer, width);

            return {
              width,
              url: uploadResult.url,
              fileSize: resizedBuffer.length,
              createdAt: new Date(),
            };
          })
        );
      }
    }

The key here is treating image processing as a configurable pipeline rather than a fixed set of operations. This allows us to easily adjust variants without touching the core logic.
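One consequence of keeping the widths in configuration is that adding a size later becomes a data change rather than a code change. The sketch below illustrates this with a hypothetical `missingWidths` helper (not part of the code above) that compares a config against the widths an image already has. The `quality` and `format` values are placeholders, and the 600px width echoes the earlier example.

```typescript
interface ProcessingConfig {
  targetWidths: number[];
  quality: number;
  format: 'jpeg' | 'webp' | 'avif';
}

// Hypothetical helper: which configured widths still need to be generated?
function missingWidths(existingWidths: number[], config: ProcessingConfig): number[] {
  const existing = new Set(existingWidths);
  return config.targetWidths.filter((width) => !existing.has(width));
}

// Placeholder values for quality and format.
const originalConfig: ProcessingConfig = {
  targetWidths: [10, 150, 350],
  quality: 80,
  format: 'webp',
};

// Six months later: one new entry, no change to the processing logic.
const updatedConfig: ProcessingConfig = {
  ...originalConfig,
  targetWidths: [...originalConfig.targetWidths, 600],
};

const alreadyProcessed = [10, 150, 350];

console.log(missingWidths(alreadyProcessed, originalConfig)); // []
console.log(missingWidths(alreadyProcessed, updatedConfig)); // [ 600 ]
```

A helper like this could drive lazy or batched generation of the new width, while older images keep working with the variants they already have.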

Instead of mapping each use case to a variant with an exact width, we implemented a scoring system that picks the most suitable available image for each case.

    interface SelectionStrategy {
      target: number;
      preference: 'prefer_smaller' | 'prefer_larger' | 'closest';
      maxAcceptableRatio: number;
    }

    function calculateImageScore(
      imageWidth: number,
      target: number,
      strategy: SelectionStrategy
    ): number {
      const ratio = imageWidth / target;

      // Base score with logarithmic distance penalty
      let score = 100 - (Math.log(Math.abs(ratio - 1) + 1) * 20);

      // Strategy-specific adjustments ('closest' keeps the base score as-is)
      switch (strategy.preference) {
        case 'prefer_smaller':
          score += ratio < 1 ? 10 : 0;
          score -= ratio > 2 ? 30 : 0;
          break;
        case 'prefer_larger':
          score += ratio >= 1 && ratio <= 1.5 ? 15 : 0;
          score -= ratio < 0.7 ? 25 : 0;
          break;
      }

      // Never return a negative score
      return Math.max(0, score);
    }

The logarithmic penalty function reflects how humans perceive image quality differences. A 140px image looks nearly identical to a 150px image, but a 75px image looks significantly worse. Linear penalties don’t reflect this well. A log penalty gives us better control over perceived image quality.
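To see what the formula produces in practice, the sketch below repeats the scoring function from above and evaluates it for a 150px target. The `maxAcceptableRatio` value of 2 and the candidate widths are assumptions chosen for illustration.

```typescript
interface SelectionStrategy {
  target: number;
  preference: 'prefer_smaller' | 'prefer_larger' | 'closest';
  maxAcceptableRatio: number;
}

// Same formula as in the article, repeated so this example runs on its own.
function calculateImageScore(
  imageWidth: number,
  target: number,
  strategy: SelectionStrategy
): number {
  const ratio = imageWidth / target;
  let score = 100 - Math.log(Math.abs(ratio - 1) + 1) * 20;

  switch (strategy.preference) {
    case 'prefer_smaller':
      score += ratio < 1 ? 10 : 0;
      score -= ratio > 2 ? 30 : 0;
      break;
    case 'prefer_larger':
      score += ratio >= 1 && ratio <= 1.5 ? 15 : 0;
      score -= ratio < 0.7 ? 25 : 0;
      break;
  }

  return Math.max(0, score);
}

// Assumed strategies for a 150px slot.
const closest: SelectionStrategy = { target: 150, preference: 'closest', maxAcceptableRatio: 2 };
const preferLarger: SelectionStrategy = { ...closest, preference: 'prefer_larger' };

// Base scores with no strategy adjustment.
console.log(calculateImageScore(140, 150, closest).toFixed(1)); // 98.7
console.log(calculateImageScore(75, 150, closest).toFixed(1)); // 91.9
console.log(calculateImageScore(300, 150, closest).toFixed(1)); // 86.1

// 'prefer_larger' subtracts 25 once the image is below 70% of the target.
console.log(calculateImageScore(75, 150, preferLarger).toFixed(1)); // 66.9
```

Under these assumed values, the base penalty alone separates the 140px and 75px candidates by about 7 points, and the `prefer_larger` adjustment widens that gap to roughly 32 points.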

We exposed this through a clean GraphQL interface that abstracts the complexity:

    type CoverImageVariant {
      url(size: ImageSize!): String
    }

    enum ImageSize {
      LQIP
      SMALL
      MEDIUM
      LARGE
    }

    type Book {
      id: ID!
      title: String!
      coverImage: CoverImageVariant
    }

Here’s how we resolve the image request using our selection strategy:

    const resolvers = {
      CoverImageVariant: {
        url: (parent: ImageVariant[], args: { size: ImageSize }) => {
          const config = IMAGE_SELECTION_CONFIG[args.size];
          if (!config) return null;

          const availableImages = parent.filter(img => img.width && img.url);
          return selectOptimalImage(availableImages, config)?.url || null;
        }
      }
    };

The frontend application does not have to worry about variants or scoring—just request the size, and the best-fit image is returned.
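The resolver above relies on `IMAGE_SELECTION_CONFIG` and `selectOptimalImage`, which are not shown in the post. The following is one possible sketch of both, not the actual implementation: the per-size targets and preferences, the interpretation of `maxAcceptableRatio` as an upper bound on width relative to the target, the `variant` helper, and the example URLs are all assumptions.

```typescript
type ImageSize = 'LQIP' | 'SMALL' | 'MEDIUM' | 'LARGE';

interface ImageVariant {
  width: number;
  url: string;
  fileSize: number;
  createdAt: Date;
}

interface SelectionStrategy {
  target: number;
  preference: 'prefer_smaller' | 'prefer_larger' | 'closest';
  maxAcceptableRatio: number;
}

// Scoring function from the article, repeated so this example runs on its own.
function calculateImageScore(imageWidth: number, target: number, strategy: SelectionStrategy): number {
  const ratio = imageWidth / target;
  let score = 100 - Math.log(Math.abs(ratio - 1) + 1) * 20;
  if (strategy.preference === 'prefer_smaller') {
    score += ratio < 1 ? 10 : 0;
    score -= ratio > 2 ? 30 : 0;
  } else if (strategy.preference === 'prefer_larger') {
    score += ratio >= 1 && ratio <= 1.5 ? 15 : 0;
    score -= ratio < 0.7 ? 25 : 0;
  }
  return Math.max(0, score);
}

// Assumed mapping from API sizes to strategies.
const IMAGE_SELECTION_CONFIG: Record<ImageSize, SelectionStrategy> = {
  LQIP: { target: 10, preference: 'prefer_smaller', maxAcceptableRatio: 2 },
  SMALL: { target: 150, preference: 'closest', maxAcceptableRatio: 2 },
  MEDIUM: { target: 350, preference: 'prefer_larger', maxAcceptableRatio: 2 },
  LARGE: { target: 600, preference: 'prefer_larger', maxAcceptableRatio: 3 },
};

// Assumed behavior: drop images wider than target * maxAcceptableRatio,
// fall back to all images if that leaves nothing, then take the top score.
function selectOptimalImage(images: ImageVariant[], strategy: SelectionStrategy): ImageVariant | null {
  if (images.length === 0) return null;

  const withinRatio = images.filter(
    (img) => img.width / strategy.target <= strategy.maxAcceptableRatio
  );
  const candidates = withinRatio.length > 0 ? withinRatio : images;
  const score = (img: ImageVariant) => calculateImageScore(img.width, strategy.target, strategy);

  return candidates.reduce((best, img) => (score(img) > score(best) ? img : best));
}

// Hypothetical test data.
const variant = (width: number): ImageVariant => ({
  width,
  url: `https://example.com/covers/${width}.webp`,
  fileSize: 0,
  createdAt: new Date(0),
});

// An older book that never got a 600px variant still resolves LARGE.
const legacyVariants = [variant(150), variant(350)];
const largeForLegacy = selectOptimalImage(legacyVariants, IMAGE_SELECTION_CONFIG.LARGE);
console.log(largeForLegacy?.url); // https://example.com/covers/350.webp
```

This is where the backward compatibility comes from: a book processed before the 600px variant existed gets its widest available image instead of a missing URL.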

Over time, variants pile up—old versions, unused images, outdated formats. We built a cleanup job that runs periodically and does the following:

    class ImageCleanupService {
      async cleanupStaleImages(): Promise<void> {
        // Remove orphaned images (no longer referenced)
        const orphanedImages = await this.findOrphanedImages();
        await this.removeFromStorage(orphanedImages);

        // Remove duplicate variants (same width, different upload times)
        const duplicates = await this.findDuplicateVariants();
        await this.removeDuplicates(duplicates);

        // Archive old variants when new processing standards are applied
        await this.archiveOutdatedVariants();
      }

      private async findOrphanedImages(): Promise<ImageVariant[]> {
        // Load existing cover IDs first; findMany() is async, so await it before mapping
        const covers = await this.db.covers.findMany();
        const coverIds = covers.map(c => c.id);
        const thirtyDaysAgo = new Date(Date.now() - 30 * 24 * 60 * 60 * 1000);

        return this.db.imageVariants.findMany({
          where: {
            coverId: { notIn: coverIds },
            createdAt: { lt: thirtyDaysAgo } // older than 30 days
          }
        });
      }
    }

This ensures our storage doesn't get bloated with unused junk.
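The duplicate-removal step is not shown in the post, so here is a minimal sketch of how it could work on in-memory records. The `StoredVariant` shape (with `id` and `coverId`) and the rule of keeping the newest variant per cover and width are assumptions; the real service would likely do this in a database query.

```typescript
// Hypothetical record shape; the post's ImageVariant has no id or coverId.
interface StoredVariant {
  id: string;
  coverId: string;
  width: number;
  createdAt: Date;
}

// For each (coverId, width) pair, keep the newest variant and return the rest.
function findDuplicateVariants(variants: StoredVariant[]): StoredVariant[] {
  const newestByKey = new Map<string, StoredVariant>();
  const duplicates: StoredVariant[] = [];

  for (const variant of variants) {
    const key = `${variant.coverId}:${variant.width}`;
    const current = newestByKey.get(key);

    if (current === undefined) {
      newestByKey.set(key, variant);
    } else if (variant.createdAt.getTime() > current.createdAt.getTime()) {
      // The incoming variant is newer, so the one we held becomes a duplicate.
      duplicates.push(current);
      newestByKey.set(key, variant);
    } else {
      duplicates.push(variant);
    }
  }

  return duplicates;
}

const storedVariants: StoredVariant[] = [
  { id: 'a', coverId: 'cover-1', width: 150, createdAt: new Date('2024-01-01') },
  { id: 'b', coverId: 'cover-1', width: 150, createdAt: new Date('2024-06-01') },
  { id: 'c', coverId: 'cover-1', width: 350, createdAt: new Date('2024-01-01') },
];

const staleVariants = findDuplicateVariants(storedVariants);
console.log(staleVariants.map((v) => v.id)); // [ 'a' ]
```

Keeping the newest copy means a re-processed cover replaces its older variant at the same width without changing which widths are available.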

What we ended up with is a scalable image system that:

This setup has given us a lot more flexibility going forward—whether we need new sizes, new formats, or smarter delivery logic.

Have you built similar systems? I'd love to hear about your approaches and challenges. You can also find me on X (Twitter) @mittalyashu77.
